AI

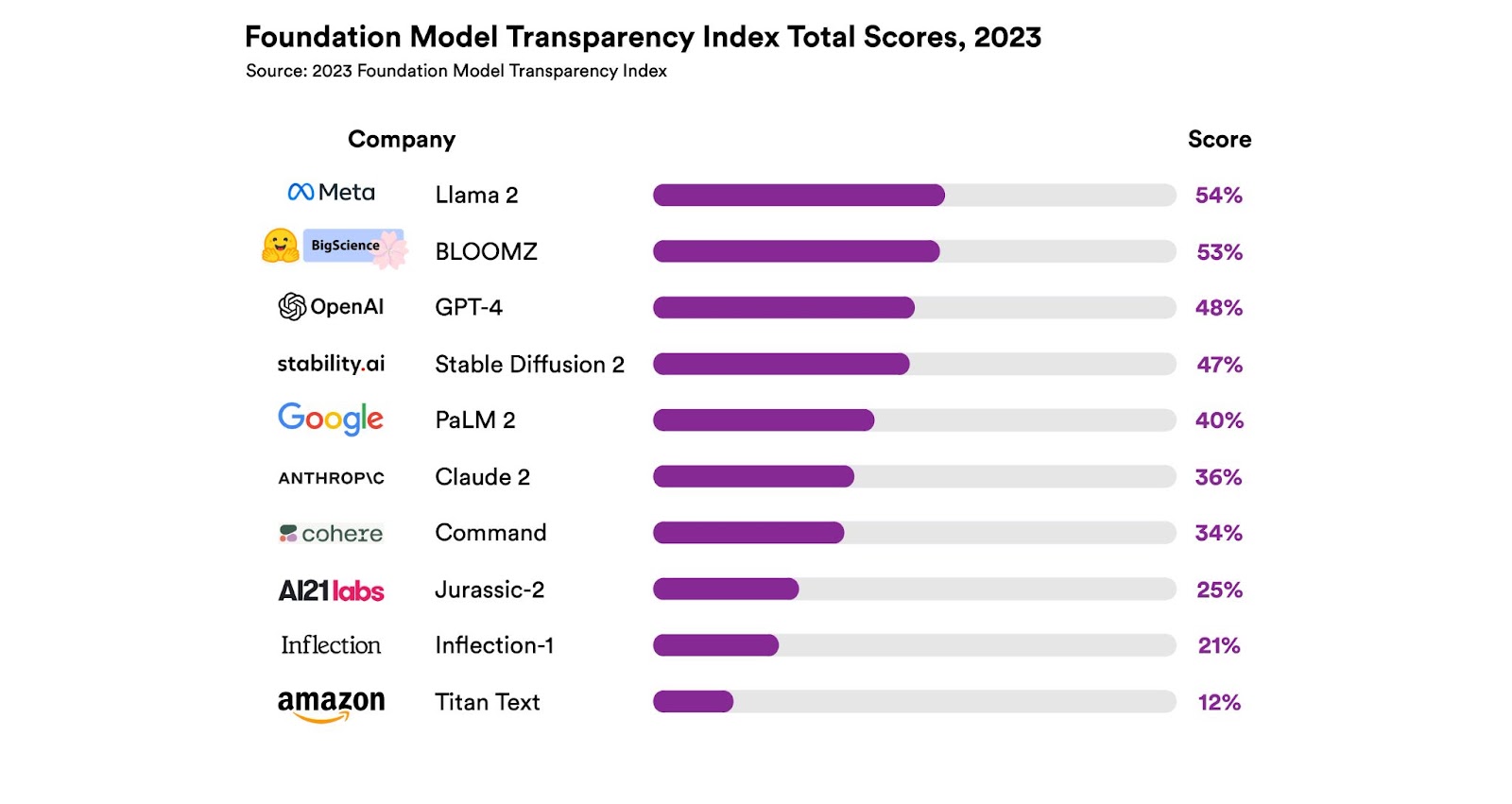

Researchers from Stanford University today published a report looking at the transparency of the most popular foundational artificial intelligence models built by companies like OpenAI LP and Google LLC, declaring that none of them are particularly open.

The authors stated that none of the most prominent developers of AI models, including companies such as Meta Platforms Inc., whose Llama 2 model topped the list, have released sufficient information on their models’ potential impact on society. The authors urged developers to reveal more about the data and human labor that go into training their models.

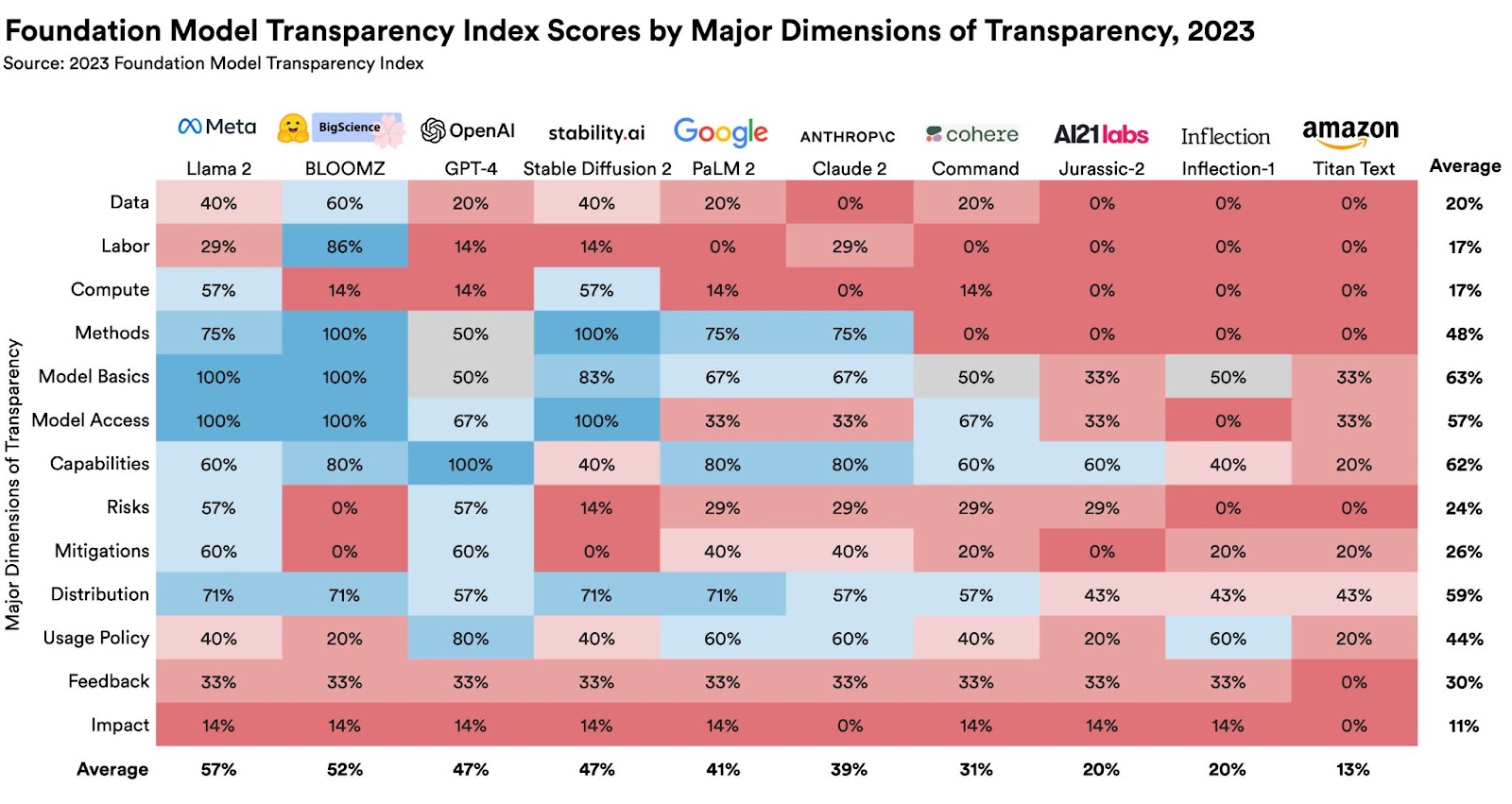

The Foundation Model Transparency Index is the work of Stanford’s Human-Centered Artificial Intelligence research group. According to the authors, they compiled their rankings by creating metrics that look at how much the model creators disclose about their work and how people use their systems. As mentioned, Meta’s Llama 2 was ranked as the most transparent with a 54% score, followed by BigScience’s BloomZ with 53% and OpenAI’s GPT-4 with 48%.

Other models in the rankings include Stability AI Ltd.’s Stable Diffusion, Anthropic PBC’s Claude, Google’s PaLM 2, Cohere Inc.’s Command, AI21 Labs Inc.’s Jurassic-2, Inflection AI Inc.’s Inflection and Amazon Web Services Inc.’s Titan.

The results are somewhat disappointing in that none of the models achieved very high marks, though Stanford’s researchers admitted in a blog post announcing the index that transparency can be a broad concept that’s hard to define. To compile the report, they created 100 indicators for information about how the models were built, the way they work and how they’re being used.

They gathered all of the publicly available data on each model and then gave each model a score for every indicator. The rankings also took into account whether the companies disclosed their partners and third-party developers, whether their models use private information, and various other factors.
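To make the scoring concrete, here is a minimal sketch of how a percentage score could be derived from per-indicator results. It assumes each indicator is simply marked as met or not met and that the overall score is the share of indicators met; the article does not spell out the exact scoring rule. The function names, model names and indicator names below are hypothetical and are not taken from the index itself.

```python
from typing import Dict, List, Tuple


def transparency_score(indicators: Dict[str, bool]) -> float:
    """Return the percentage of indicators marked as met (assumed met/not-met scoring)."""
    if not indicators:
        raise ValueError("at least one indicator is required")
    met = sum(1 for satisfied in indicators.values() if satisfied)
    return 100.0 * met / len(indicators)


def rank_models(results: Dict[str, Dict[str, bool]]) -> List[Tuple[str, float]]:
    """Score every model and sort from most to least transparent."""
    scores = {name: transparency_score(ind) for name, ind in results.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical toy data: four made-up indicators for two made-up models.
example = {
    "model_a": {
        "data_sources_disclosed": True,
        "labor_disclosed": False,
        "usage_policy_published": True,
        "complaint_channel_listed": False,
    },
    "model_b": {
        "data_sources_disclosed": True,
        "labor_disclosed": True,
        "usage_policy_published": True,
        "complaint_channel_listed": False,
    },
}

ranking = rank_models(example)
for name, score in ranking:
    print(f"{name}: {score:.0f}%")
```

With 100 indicators, as in the index, the number of indicators met would equal the percentage score under this assumption.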

The researchers said Meta’s open-source Llama 2 came in first with 54% because the company had previously released research on how it created the model. BloomZ, which is also open source, came in just shy of Llama 2. The results suggest that open-source models have a definite edge in terms of transparency.

OpenAI scored 48% despite its opaque design approach. According to the researchers, OpenAI rarely publishes any of its research and does not disclose the data sources it uses to train GPT-4.

However, there is a lot of public information about how GPT-4 works from OpenAI’s partners. The company works with numerous enterprises that have integrated GPT-4 into their own applications and services, and many of these have provided information.

The researchers’ main criticism is that not even the open-source models provide any information about their societal impact, including where users can direct complaints regarding privacy, copyright and bias.

Constellation Research Inc. Vice President and Principal Analyst Andy Thurai told SiliconANGLE he isn’t shocked to see such low transparency ratings as most foundational model creators are intentionally secretive. “OpenAI is very clear about the transparency of its models — it won’t ever be transparent about GPT-4,” Thurai said. “This is because it doesn’t want competitors to catch up and compete, and it doesn’t want additional questions on how enterprises use its models.”

The analyst said it was more surprising that the open-source LLMs scored so low in terms of transparency. But by and large, he said most LLM creators take the same approach, advising customers to use them at their own risk and trying to limit their liabilities, even though it is to the detriment of their growth.

“Black box models and decision-making is one of the reasons why AI hasn’t gained as much traction as it should have over the years,” Thurai said. “By continuing on this path, it’s likely large organizations will continue to shy away from using them. The lack of transparency also makes it tricky for policymakers to create guidelines on the proper usage of AI, as they don’t know what is involved in making those models.”

Stanford researcher Rishi Bommasani, who co-authored the study, said the purpose of the index is to create a reliable benchmark that can be used by governments and companies. It comes as the European Union moves toward enacting an Artificial Intelligence Act that will establish rigorous regulations around AI.

Under the AI Act, AI tools will be categorized according to their level of risk, in order to deal with concerns around the dissemination of misleading information, discriminatory language and biometric surveillance, among others. If and when the act comes into law, any company that uses AI tools such as GPT-4 will be required to disclose the copyrighted material used in its development. The index could therefore prove to be a useful tool in ensuring compliance.

“What we’re trying to achieve with the index is to make models more transparent and disaggregate that very amorphous concept into more concrete matters that can be measured,” Bommasani said.

Although a vibrant open-source community has sprung up around generative AI, the work of the biggest companies in the industry remains shrouded in secrecy. OpenAI, for example, isn’t nearly as open as its name suggests, having decided to stop distributing its research because of competition from its rivals and concerns over safety.

According to Bommasani, Stanford HAI intends to update the Foundation Model Transparency Index on an ongoing basis, and will expand its scope to include additional models.
